Breaking Development is a conference about the future — as it should be. But as I reflect on the past couple of days of talks about going beyond the desktop, there’s one thought I can’t get out of my head:

We need to push the limits at both ends of the technology spectrum.

I’ll come back to that. First, I wanted to summarize the major themes that stood out for me at the San Diego conference on 22-23 July:

- Forget about device classes like phone, tablet, laptop, and desktop. Instead, let ergonomics, input methods, and multiple-screen experiences guide design decisions.

- We need to start thinking about designing for wrist devices (smartwatches) and eye devices (Google Glass), as well as wall devices like TVs (think Xbox One). See my notes on Luke Wroblewski’s talk.

- The biggest development challenges are going to come from new input methods like voice control and gesture devices like Leap Motion (see the slides from Jason Grigsby’s talk).

- The problem is that we can’t reliably detect screen sizes and input methods (keyboard, mouse, touch) to adapt content appropriately.

- The good news is that we can look forward to advances in CSS3 that allow for full control over content layout (see the slides from Divya Manian’s talk).

- So, how should we adapt to these changes?

These are important themes and I got a lot of value out of the talks. We should absolutely explore the boundaries of new input methods like voice and gestures, and play around with experimental CSS features that let us take more control over content layout. This is how we move technology forward, and where we get to take advantage of the latest hardware and software standards.

But I’m a bit worried that we tend to push into the future so fast that we abandon fields of existing technology to wither and die before they’ve reached their full potential. It’s fine to experiment with the new and exciting, but innovation doesn’t happen only in the forward direction; lateral jumps often result in really innovative ideas.

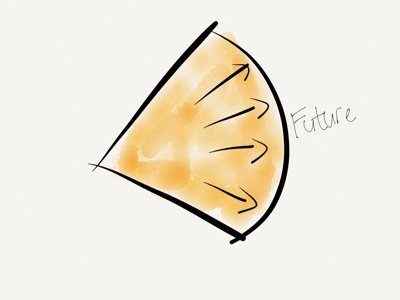

I’m not sure if this is the best way to illustrate what I’m trying to say, but here’s what it looks like in my head. If we focus only on pushing the boundaries of the future, we end up with a pretty small “area of innovation”, for lack of a better term:

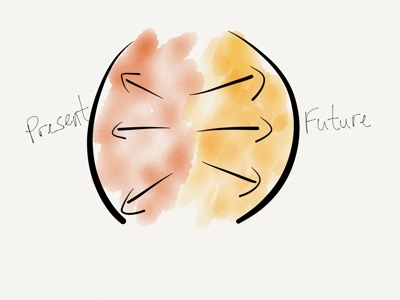

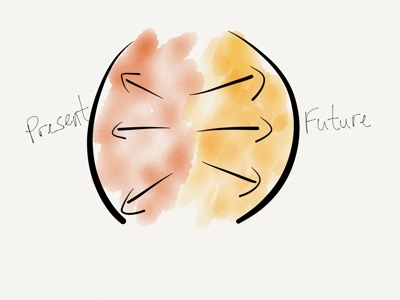

But if we also work on pushing the boundaries of what we already have, we increase that area by a large amount:

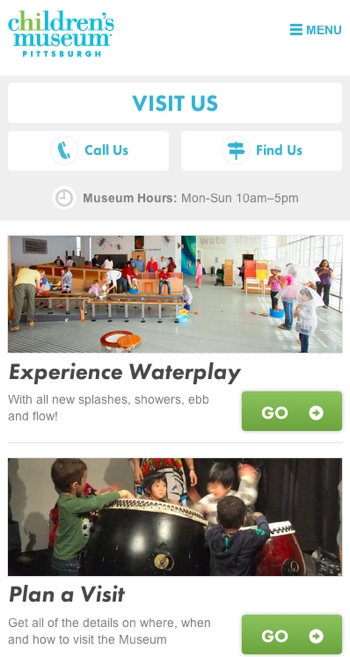

One example I keep coming back to is USSD. It’s a low-end mobile technology that got completely skipped over in the U.S., but is widely used all over Africa because it’s supported on everything from the most basic feature phones all the way to the iPhone. Projects like MAMA (using mobile technologies to improve the health and lives of mothers in developing nations) and messaging platforms like Vumi already use USSD in really innovative ways. We’re not talking about these low-end product solutions enough, and that’s a shame.

There are really two sets of questions we have to explore when designing and developing beyond the desktop. First, what can we do at the far edge of technology? How can we push the boundaries of the future? How can we go further?

But we also have to ask, what can we do at the low end of technology? How can we push the boundaries of the present? How can we do more with what we already have?

As much as what I learned at Breaking Development will help evolve our company’s processes for future-friendly development, the most surprising outcome for me is how much it got me thinking about the potential to use existing technologies to solve the problems we run into while we push into the future. This is why I got more out of Breaking Development than I expected, and why I can highly recommend it to anyone who designs and builds digital interfaces.