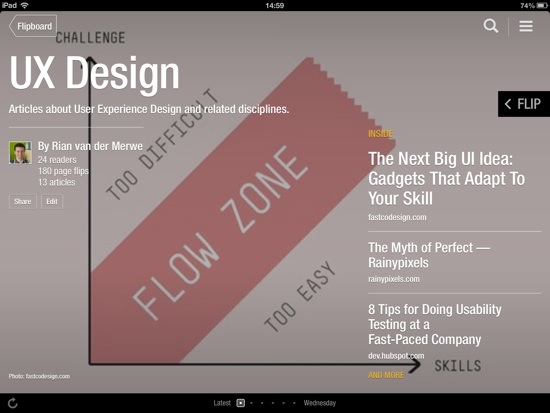

Our beloved industry is pretty wary of buzzwords. And by “our industry” I mean User Experience Designers (although we can’t even agree on what to call ourselves, so that’s another problem, I guess). Anyway. Debates over terms like skeuomorphism, flat design, and “No UI” have given us a strong skepticism of fancy words. That’s mostly refreshing, but it can also be a handicap if we end up dismissing valuable ideas because we don’t like the terms that describe them.

I would argue that the term Lean UX fits into this last category. It’s easy to dismiss as just another bandwagon fad, but now that I’ve read through Jeff Gothelf and Josh Seiden’s Lean UX: Applying Lean Principles to Improve User Experience, I believe that it’s a valuable framework to help us understand where the UX industry is headed, and how to do our jobs better.

Whether you want to call the theory and techniques discussed in this book “Lean” or just “How we work” doesn’t matter much, in my opinion. What is important is that we understand the benefits of moving towards a more iterative, outcomes-based design approach, while letting go of some of our reliance on classic design deliverables. In the introduction, the authors sum up the main reason for this proposed change in approach:

> But the fault is not with the designers, or the engineers, or even the executives. The problem is the systems we use to build companies. We are still building linear organizations in a world that demands constant change. We are still building silos in a world that demands thorough collaboration. And we are still investing in analysis, arguing over specifications, and efficiently producing deliverables in a world that demands continuous experimentation in order to achieve continuous innovation.

The book then describes how to build better products through real collaboration. I say real because Jeff and Josh don’t just say, “you should work together!” They detail a number of practical techniques for working together better, as well as case studies to show how it works in real-world situations. And underlying all of this are the three principles of what they define as Lean UX:

- Removing waste from the design process to move away from heavily documented handoffs to a process that creates only the design artifacts needed to move the team’s learning forward.

- Improving the efficiency of the “system” of designers, developers, product managers, quality assurance engineers, marketers, and others in a transparent, cross-functional collaboration that brings nondesigners into the design process.

- Shifting mindsets away from relying on “hero designers” to divine the best solution from a single point of view, in favor of using rapid experimentation and measurement to learn quickly how well (or not) ideas meet the company’s goals.

Some UX designers will read this book and say that this is how they’ve always worked. That might be true — it’s true for our agency as well, to some extent. But I still found it extremely helpful to have a concrete framework for the work we do, combined with solid reasoning about the benefits of this approach. I also picked up some great execution ideas for techniques we use already — like persona templates and design studio facilitation.

The only part of the book that might be a bit controversial is the discussion of how Lean UX fits into Agile development. Jeff and Josh argue that the long-accepted idea of Sprint 0 or Staggered Sprints (making sure that design is always a sprint ahead of development) doesn’t work long-term:

> However, this model works best as a transition. It is not where you want your team to end up. Here’s why: it becomes very easy to create a situation in which the entire team is never working on the same thing at the same time. You never realize the benefits of cross-functional collaboration because the different disciplines are focused on different things. Without that collaboration, you don’t build shared understanding, so you end up relying heavily on documentation and handoffs for communication.

They propose a very interesting alternative that makes a lot of sense (I won’t spoil it for you), but some of the concerns I’ve raised before about Agile UX remain. I’m not sure whether any Agile UX technique allows enough leeway to test and research different variations of a product idea, or whether it just streamlines the iteration process to get you to a local maximum faster.

In summary, Lean UX is a great overview of what an effective UX process should look like. There’s a good balance between theory, practical advice, and case studies. This makes it a valuable resource for those new to the field, but it also gives experienced UX practitioners a framework to structure and communicate the work they do every day. Highly recommended.

Buy Lean UX on Amazon.