I’ve been spending lots of time recently thinking about and working with my team on what I can only refer to as “how we should spend our life force.” If that sounds weird, hold on to your hats because I’m going to make it even weirder by (and I apologize in advance) throwing a 2×2 matrix into the mix. So. Come with me in this post as we discuss how our biggest strength as product managers can easily become our biggest weakness, and how we can protect our health and sanity in the midst of all the turmoil in our companies and the world at large.

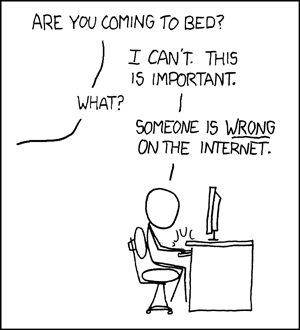

First, without getting too deep into the metaphysical or getting myself into trouble over things I don’t know enough about, I do think it’s important for each of us to make conscious decisions to spend our “life force” on things that generally make us feel fulfilled and bring us closer to the person we want to be. That can take many forms: a bike ride, a fun side project, a bad action show (The Night Desk is so bad it’s good!), an interesting problem at work… those can all be good ways to spend our life force! Being on the internet too long, on the other hand, rarely is.

This is true at a macro level in our daily lives, but also when we zoom into how we spend our time at work. PMs in particular have an annoying habit of gravitating towards the wicked problems. It’s a trait that makes us good at what we do, but it can also be self-defeating: when we spend too much of our time depleting our life force, burnout eventually finds us.

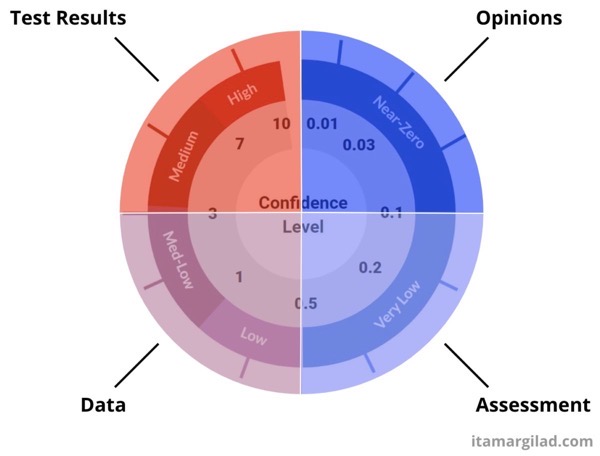

So 2×2 matrix incoming! I think of the way we spend our life force as PMs on two dimensions: the difficulty of the task, and our likelihood of influencing the task’s success.
