I recently started a series on software development and the role of the Product Manager. If you haven’t already done so, it might be a good idea to read Part 1 (Overview) before continuing. In this post I’d like to write about the first step in the development process, namely Product Requirements, and the various sources of input that go into deciding what to build and how to improve your product.

As I started writing, I realized the topic is just too big for one post, so I’m going to split it up into a few different posts:

- Part 2 (this post) will be about user needs as an input to product requirements.

- Part 3 will be about business needs and technology needs as inputs to product requirements.

- Part 4 will be about the PM’s role in the Product Requirements phase.

Even though the focus here is not on what kind of product/service your company should develop and sell, I do want to briefly mention product/market fit, because it is probably the most important thing a company has to figure out to become a successful business. No one talks about this better than Marc Andreessen, so I want to quote from one of his (now deleted) blog posts:

> The quality of a startup’s product can be defined as how impressive the product is to one customer or user who actually uses it: How easy is the product to use? How feature rich is it? How fast is it? How extensible is it? How polished is it? How many (or rather, how few) bugs does it have?
>
> The size of a startup’s market is the number, and growth rate, of those customers or users for that product.
>
> The only thing that matters is getting to product/market fit. Product/market fit means being in a good market with a product that can satisfy that market.

With product/market fit figured out (no easy task) and a workable product to start with, it’s time to get serious about building and improving the product, and that’s the stage where this post starts. At the heart of a good product roadmap stands a Product Manager who is able to strike a balance between user needs, business needs, and technology needs. So let’s look at each of those in detail, starting with user needs.

User needs

Identifying user needs is at the core of a user-centered design process. It involves gathering feedback from the users of your product through a variety of methods to uncover unmet needs and opportunities for improvement. This is called user experience research (UER), and there are plenty of resources available on the topic, so I’m just going to provide an overview here.

First, it’s important to mention the fundamental difference between market research and user experience research:

- Market research seeks to understand the needs of the market as a whole, and tends to focus on areas like market fit, brand perception, advertising and pricing research, and ways to uncover perceptions of the company and its products.

- In contrast, user experience research focuses on users’ interaction with the product. It relies heavily on observational techniques, since users are notoriously bad at describing their experiences or predicting their behavior.

There are many ways to classify different UER methodologies, but my preference is for a classification that lines up the different methods with the outcomes required by different phases of the product development process. In that approach, there are three classes:

1. Strategic research

Strategic research aims to generate new product ideas and business opportunities, or to provide preliminary design explorations during the product discovery phase that help with brainstorming design solutions. Here are some of the methods that fall into this class:

- Ethnography. A technique long used in anthropology that has only recently found its way into the toolkit for research on interactive products. It involves going to users’ homes or offices and observing how they use your products in their natural environment, which lets the researcher see users in action where they feel most comfortable. Ethnography is all about observing and listening. It is generally not task-based like usability studies, but follows a loose interview script with the goal of uncovering needs and insights that users are unable to articulate. I’m extremely biased in favor of ethnographic research, especially in contrast to focus groups (of which I’m not a fan at all, but that’s a subject for a different post). I have seen first-hand how ethnography sparks innovation when it reveals the workarounds users invent for the limitations of software, which in turn point to opportunities for product improvements. When it comes to exploratory methods that help with product strategy and roadmaps, there simply is nothing better than a good ethnography study.

- Participatory design. Another favorite, this technique brings users together to solve design problems in a way that makes sense to them. The purpose is not to take users’ designs and implement them, but to find out which elements and activities are most important to them. My preference is to do this in dyads, where two users work together on a design. This forces both participants to be active, and you learn as much from their conversation with each other as from the designs they put together. The usual process is to give users a blank page or basic framework and cut-outs of various elements that could go on a page, then watch and listen as they make trade-offs and decide what should go on the page based on how they would use the product. This technique is especially helpful for guiding interaction design, because it gives a glimpse into users’ process as they move through the site.

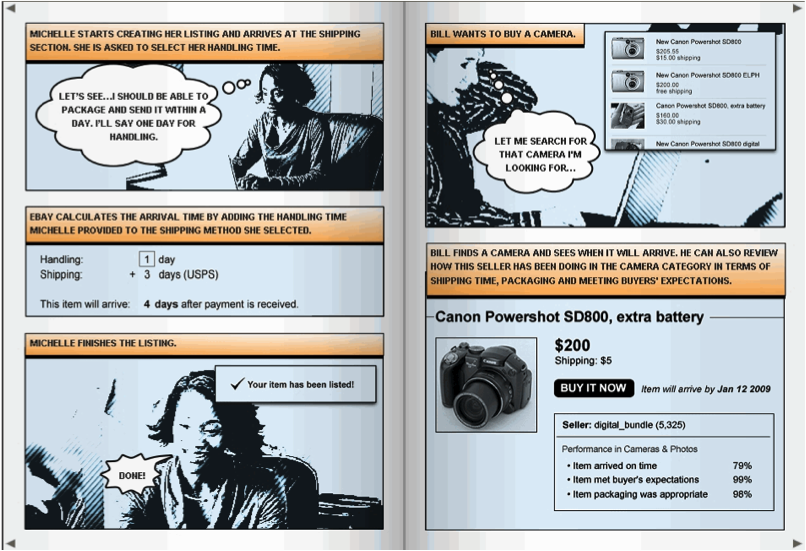

- Concept testing. This is a good way to gather feedback on an approach before wireframes or mockups are created. Storyboards/comics are great artifacts to use for this kind of testing, since it takes design out of the process, and gathers feedback from users on the process you intend to design. Although mostly done in-person with 6-8 users, there are also great tools for large-scale concept testing, like Invoke. Below is an example of a storyboard one of our researchers at eBay used during early concept testing for one of our products:

Further reading on strategic research:

- Ethnography in Industry: Objectives?

- How to set up and run participatory design sessions, analyze data and present results effectively

2. Design research

Design research includes most of the methods that are associated with traditional user experience research. Its role is to improve and refine existing designs at various levels of fidelity. Some of the methods include:

- Usability testing. This is, of course, the most well-known UER method, and what most people default to for any kind of design feedback. Task-based usability testing in a lab is a fantastic tool, but it has become a little too much of a “when all you have is a hammer…” method. Usability testing should be used to uncover usability problems with a proposed interaction design. It should not be used to get feedback on visuals, find out which design users prefer, or uncover new product ideas; other techniques are much better suited to those tasks. Usability testing involves 1-on-1 sessions in which the researcher observes users as they perform assigned tasks. This kind of direct observation is a great way to understand what users would actually do on the site (as opposed to what they say they would do), as well as to uncover the reasons why they do what they do.

- RITE testing. Rapid Iterative Testing & Evaluation (RITE) is a very specific form of usability testing, but I wanted to call it out because it is my preferred way of testing. It involves a day or two of focused usability testing, followed by a design cycle where the feedback is immediately injected into the design before the next round of testing. Doing several of these cycles quickly means your outcome isn’t a bloated PowerPoint deck with a bunch of recommendations; your outcome is a better design that incorporates user feedback in real time. As the debate continues about how UX can be more involved in Agile development, this technique should become increasingly important, since it fits perfectly with the Agile mindset.

- Desirability studies. Invented by researchers at Microsoft (yes, really: see this Word article where they outline the approach), this has become another favorite technique of mine when I want feedback on the visual aspects of a site (not so much the interaction), and specifically on which visual approach users like more when there is more than one alternative. It involves sending a survey to a large number of users, who are asked to rate one of the proposed design alternatives on a semantic differential scale (a rating scale anchored by pairs of opposite adjectives). The survey is run as a between-subjects experiment, meaning each user sees only one of the proposed designs, so that they are not influenced by the other design alternatives. The analysis then shows the differences in visual desirability between the proposed design alternatives.
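To make the analysis step of a desirability study a bit more concrete, here is a minimal Python sketch. It assumes each respondent rated only one design on a few semantic differential items scored 1 to 7. It averages those items into one score per respondent and compares the two independent groups with Welch's t-test, which is a standard choice for between-subjects data, though not necessarily what the Microsoft researchers used. The data and function names are hypothetical, and the toy groups are deliberately tiny. The p-value uses a normal approximation that is only reasonable with the large samples these surveys are meant to have. With small groups, a proper t-distribution (for example `scipy.stats.ttest_ind(a, b, equal_var=False)`) is the safer choice.

```python
import math
from statistics import mean, variance


def respondent_score(ratings):
    """Average one respondent's ratings across all adjective pairs (1-7 scale)."""
    return mean(ratings)


def welch_t(a, b):
    """Welch's t statistic and approximate degrees of freedom for two
    independent samples (no equal-variance assumption)."""
    se_a = variance(a) / len(a)  # squared standard error of group a's mean
    se_b = variance(b) / len(b)  # squared standard error of group b's mean
    t = (mean(a) - mean(b)) / math.sqrt(se_a + se_b)
    df = (se_a + se_b) ** 2 / (se_a ** 2 / (len(a) - 1) + se_b ** 2 / (len(b) - 1))
    return t, df


def two_sided_p_normal(t):
    """Two-sided p-value from a normal approximation; only reasonable
    when each group has many respondents."""
    return math.erfc(abs(t) / math.sqrt(2))


# Hypothetical survey data: each inner list holds one respondent's ratings on
# three adjective pairs. Between-subjects: every respondent saw only one design.
design_a = [[4, 5, 6], [6, 6, 6], [3, 4, 5], [5, 6, 7],
            [5, 5, 5], [7, 7, 7], [6, 5, 7], [4, 6, 5]]
design_b = [[4, 4, 4], [3, 2, 4], [5, 5, 5], [3, 4, 5],
            [4, 5, 3], [2, 3, 4], [6, 4, 5], [4, 4, 4]]

scores_a = [respondent_score(r) for r in design_a]
scores_b = [respondent_score(r) for r in design_b]

t, df = welch_t(scores_a, scores_b)
p = two_sided_p_normal(t)
print(f"t = {t:.2f}, df = {df:.1f}, p (normal approx.) = {p:.4f}")
```

A positive t means design A was rated as more desirable on average. In a real study you would also look at the individual adjective pairs, since they usually tell you *why* one design wins.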

Further reading on design research:

- Complete beginner’s guide to design research

- Usability testing overview

- Do’s and Don’ts of usability testing

3. Assessment research

Often neglected, assessment research measures the impact of design decisions, evaluates their success, and identifies areas that still need improvement. It requires larger sample sizes to make it possible to compare before/after metrics with statistical significance, so these methods are mainly quantitative in nature. Methods include:

- Product surveys. Everyone hates surveys, but they remain an effective way to assess how design changes affect user perceptions of the site. Unlike most market research surveys you receive (and delete) in your inbox, these surveys deal specifically with user perceptions of the interaction and design. They are not always effective as standalone research studies, since response rates below 5% introduce a lot of bias. But if you can run surveys over time and control the sample so that the bias stays the same, they can be a very good tool for ascertaining the success of your design changes.

- Online user experience assessments. Another favorite: tools like Keynote allow you to gather real-time click-through data as well as subjective user feedback. They use a proxy or a browser download to give users tasks on a site, and ask them questions about the experience while their activity is being tracked. This often produces a mountain of data, which can be quite overwhelming and is not always used effectively if there isn’t enough time or resources available to analyze it.

- Analytics. Web analytics need no introduction, but there are several tools specifically aimed at user experience, my favorite being Razorfish’s Advanced Optimization tool for web forms. By placing a small piece of JavaScript on form pages, it gives you a wealth of data on how users interact with forms, including which error messages they receive, how much time they spend in each field, the last field they were on before closing the form, scrolling data, and the list goes on. It’s a great way to improve form conversion. I honestly don’t know why they don’t market this thing more.
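Since assessment research is all about showing that a before/after difference is statistically significant, here is a minimal Python sketch of one common way to check that for a conversion-style metric, such as the form completion rate these analytics tools report: a pooled two-proportion z-test. The function name and the form-completion numbers are hypothetical, made up for illustration. The test assumes the two samples are independent and reasonably large. It also can’t correct for sampling bias, which is why keeping the sample consistent over time (as with the product surveys above) matters.

```python
import math


def two_proportion_z_test(successes_before, n_before, successes_after, n_after):
    """Pooled two-proportion z-test. Returns (z, two-sided p-value).
    Relies on the normal approximation, so both samples should be large."""
    p_before = successes_before / n_before
    p_after = successes_after / n_after
    # Under the null hypothesis both periods share one rate, so pool them.
    pooled = (successes_before + successes_after) / (n_before + n_after)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_before + 1 / n_after))
    z = (p_after - p_before) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail of N(0, 1)
    return z, p_value


# Hypothetical numbers: completed forms out of form starts, before and after a redesign.
z, p_value = two_proportion_z_test(400, 1000, 450, 1000)
print(f"z = {z:.2f}, p = {p_value:.3f}")
```

With these made-up numbers (40% vs. 45% completion out of 1,000 form starts each), z comes out at roughly 2.26 with a two-sided p-value of about 0.024, so the lift would be unlikely to be pure chance at the usual 5% level.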

So that’s an overview of user research methods. There are many more, but I wanted to focus on the ones I’ve found especially useful in my own work. The real power of user research emerges when you combine methods and triangulate the results into a product strategy that takes a variety of quantitative and qualitative insights into account. If you’re interested in learning more about this, Using Multiple Data Sources and Insights to Aid Design is a good post on the topic.

There are so many resources available on user research — your best bet to stay on top of the latest happenings in the field is to subscribe to UX Booth and UX Matters.

In Part 3, I’ll talk about the other two inputs to Product Requirements: Business Needs and Technology Needs. Then, in Part 4, I’ll discuss how all of this fits into creating Product Requirements, and what the Product Manager’s role is in all of it. Stay tuned!